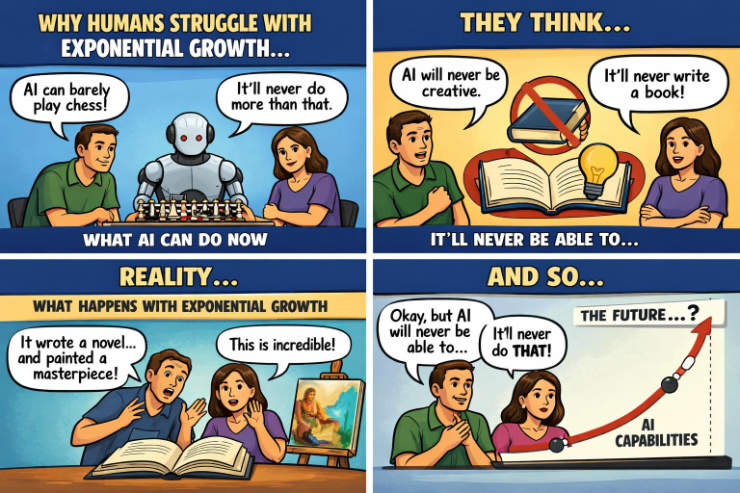

I rarely see people talking about exponential growth in AI capability, and few talk about growth of any kind. Maybe I’m hanging out with the wrong people? Too many seem to base their predictions on the current state of the art, with barely a hat tip to the increase in capability we’ve seen in just the past two years, or to any extrapolation of AI capability into the next two.

Who taught you not to dream? Who destroyed your imagination, little Johnny?

Current state of the art: LLMs

The current “dumb” pattern- and probability-finding transformer model will be replaced by models that are already being conceived, some of them already in alpha and beta stages.

Whatever the platform or mechanism, those pioneers are using AI as a tool (for now) to accelerate their own progress: a virtuous circle.

Corporations and governments are sinking billions into the race to develop better AI. Short of running out of money, none of them will stop. Some, of course, will move more slowly, having sunk so much cost into the current paradigm that their bean counters advise milking it for as long as possible.

Coding by humans – even the best of us – will very soon no longer be practical.

Right now we are still needed for babysitting AI to “build it right”, test, and deploy.

Very soon, AI will handle all three of those functions.

| Model | Estimated Reasoning Capability | Likelihood of Success (Updated) | Predicted Time to Market (Updated) | Key 2025 Developments |
|---|---|---|---|---|
| Model Collaboration Ecosystems | High (cross-domain synthesis) | High | 2025-2026 | Enterprise integrations via open-source ecosystems; AI-enhanced learning case studies. |
| Neuro-Symbolic Integration | High (causal reasoning) | High | 2026-2027 | Five-stage frameworks; hallucination reduction in LLMs; applications in data extraction. |
| Brain-Inspired Computing Models | Medium-High (specialized tasks) | High | 2025-2026 | SNN surveys and benchmarks; reinforcement learning for plasticity; robotics efficiency. |
| Liquid Neural Networks | Medium-High (adaptive control) | Medium-High | 2026-2027 | LFM2 release; NeurIPS advances; edge AI power reductions. |
| Photonic Computing Designs | High (efficiency-focused) | Medium | 2026-2027 | Ultralow-latency processors; 100x speed chips; commercialization hype cycle inclusion. |
| Quantum-Integrated Architectures | High (theoretical potential) | Medium | 2026-2028 | NVIDIA NVQLink; Google roadmap; commercial advantage deployments. |
| Self-Improving AGI Prototypes | High (autonomous refinement) | Medium-Low | 2027+ | AGI predictions for 2026; Hyperon scalable intelligence; ethical alignment discussions. |
| Large Concept Models (New Addition) | High (conceptual coherence) | Medium | 2026+ | SONAR embeddings; multimodal integration; structured outputs beyond tokens. |

Chart details below.

Some detail on recent evolutions

The AI landscape evolved rapidly, with breakthroughs in hardware integration, efficiency-focused designs, and hybrid systems, as documented in reports like the Stanford 2025 AI Index, which notes continued improvements in benchmarks like MMMU and GPQA while highlighting the emergence of multimodal and agentic models. McKinsey’s Global Survey on AI from November 2025 reveals that while most organizations invest in AI, maturity remains low, underscoring the need for practical advancements beyond hype. Gartner’s 2025 Hype Cycle for AI expands beyond generative AI to include photonic computing and neuro-symbolic techniques, signaling a shift toward high-impact emerging technologies. MIT Technology Review’s December 2025 piece on the “great AI hype correction” cautions against overpromising, noting that while LLMs dominated, unfulfilled expectations pushed interest toward alternatives.

Progress in Quantum-Integrated Architectures

Quantum-integrated architectures were originally pegged for 2028+ with a low likelihood of success due to qubit instability, but 2025 saw notable strides. NVIDIA unveiled a quantum-integrated computing architecture in October, including NVQLink for hybrid processing, enhancing AI optimization tasks. Google’s Quantum AI team outlined a five-stage roadmap in November, focusing on useful applications through AI-driven algorithm discovery. Q-CTRL reported commercial quantum advantage in 2025, with global deployments in error correction and machine learning integration. Bain & Company’s September report estimates up to $250 billion in impact, though realized gradually, and highlights hardware colocation in supercomputers as a key enabler. Challenges persist in scalability, but these developments suggest revising the timeline to 2026-2028 and the likelihood to medium, acknowledging the convergence of AI and quantum for tasks like drug discovery.

Advances in Liquid Neural Networks

The article describes these as dynamic, adaptive networks for real-time control, with a 2027-2028 timeline and medium likelihood. In July 2025, Liquid AI released LFM2, doubling performance on edge devices like phones and drones. NeurIPS 2025 showcased efficiency gains, with models optimized for CPUs and NPUs enabling privacy-focused applications. A Science Focus article in November detailed worm-brain-inspired LNNs that reduce power use by 10x, making them suitable for robotics. Discussions on Medium emphasized their inherent causality, an improvement over traditional RNNs in continuous learning. This progress supports moving the timeline up to 2026-2027 and the likelihood to medium-high, with expanded sections on multimodal integration and energy efficiency benchmarks.

Updates on Self-Improving AGI Prototypes

Positioned as high-potential but low-likelihood for 2028+, these systems using synthetic data loops advanced in 2025 discourse. Scientific American’s December piece explores steps toward superintelligence, with models like those from Google automating scientific methods for exponential progress. AIMultiple’s analysis of 8,590 predictions forecasts early AGI-like systems by 2026, emphasizing recursive self-improvement. SingularityNET’s Hyperon update in December highlights verifiable computation for scalable intelligence. Forbes’ July forecast outlines AGI-to-ASI pathways, noting 2045 for full recursive enhancement but 2025 prototypes in theorem proving. Ethical debates on alignment grew, suggesting an added section on safety, with timeline adjusted to 2027+ and likelihood to medium-low.

Developments in Neuro-Symbolic Integration

Neuro-symbolic integration carried a 2026-2028 timeline and medium likelihood, and 2025 brought foundational analyses. A Medium roadmap proposes five-stage integration for hybrid reasoning. Ultralytics’ November introduction emphasizes transparency in context understanding. Singularity Hub noted in June that neurosymbolic cycles reduce hallucinations in LLMs. An October IEEE paper classifies approaches, aiding scalability. A November study in Nature integrates GPT-4 with rule-based systems for auditable extraction. This supports maintaining the timeline but raising the likelihood to high, with new subsections on applications in healthcare and legal tech.

Brain-Inspired Computing Models

These models focus on spiking neural networks (SNNs) running on neuromorphic chips like Intel Loihi, and were originally rated medium likelihood for 2025-2027. An October arXiv survey analyzes SNN designs for energy-efficient object detection. A Frontiers in Neuroscience review covers deep learning with SNNs for brain simulation. A May paper in Neurocomputing uses reinforcement learning for synaptic plasticity. CVPR 2025 highlighted SNNs in temporal processing. A PNAS paper evolves neural circuits biologically. These advances confirm the timeline, with the likelihood raised to high for specialized tasks like robotics.

Photonic Computing Designs

Photonic designs were rated low likelihood for 2028+, but breakthroughs in 2025 accelerated progress. Singularity Hub’s December article reports a light-powered chip 100x faster than NVIDIA’s A100. An October ScienceDaily report describes a breakthrough enabling speed-of-light computation. Q.ANT’s November photonic NPU reduces energy use for AI workloads. An integrated processor published in Nature in April marks a hardware advance. The World Economic Forum’s August piece notes commercialization potential. Together these suggest shifting the timeline to 2026-2027 and the likelihood to medium.

Model Collaboration Ecosystems

Model collaboration ecosystems were rated high likelihood for 2025-2026. McKinsey’s January report on AI in the workplace stresses collaborative maturity. Forbes’ May piece describes how ecosystems fuel AI strategies. Red Hat’s April piece emphasizes open-source partnerships. Equinix’s October piece highlights multiplier effects in deployments. The timeline holds, with enterprise case studies to be added.

Emerging Additions and Broader Trends

Large Concept Models (LCMs) are added for conceptual processing that enhances coherence, per a March 2025 X post. A December Medium article lists eight model types beyond LLMs, including world models. Wevolver’s July article explores RL and time-series AI. Ahead of AI’s November article discusses independent world models. Information Difference’s September article covers multimodal AI.

Key Citations

– [The 2025 AI Index Report | Stanford HAI](https://hai.stanford.edu/ai-index/2025-ai-index-report)

– [The State of AI: Global Survey 2025 – McKinsey](https://www.mckinsey.com/capabilities/quantumblack/our-insights/the-state-of-ai)

– [The 2025 Hype Cycle for Artificial Intelligence Goes Beyond GenAI](https://www.gartner.com/en/articles/hype-cycle-for-artificial-intelligence)

– [The great AI hype correction of 2025 | MIT Technology Review](https://www.technologyreview.com/2025/12/15/1129174/the-great-ai-hype-correction-of-2025/)

– [NVIDIA announces new quantum-integrated computing architecture](https://www.nextgov.com/emerging-tech/2025/10/nvidia-announces-new-quantum-integrated-computing-architecture/409122/)

– [Liquid AI: Build efficient general-purpose AI at every scale.](https://www.liquid.ai/)

– [Are Businesses Ready for the Next Phase of AI — Self-Improving Models and the Road to AGI](https://t2conline.com/are-businesses-ready-for-the-next-phase-of-ai-self-improving-models-and-the-road-to-agi/)

– [Neuro-Symbolic AI: A Foundational Analysis of the Third Wave’s Hybrid Core](https://gregrobison.medium.com/neuro-symbolic-ai-a-foundational-analysis-of-the-third-waves-hybrid-core-cc95bc69d6fa)

– [Spiking Neural Networks: The Future of Brain-Inspired Computing](https://arxiv.org/abs/2510.27379)

– [This Light-Powered AI Chip Is 100x Faster Than a Top Nvidia GPU](https://singularityhub.com/2025/12/22/this-light-powered-ai-chip-is-100x-faster-than-a-top-nvidia-gpu/)

– [AI in the workplace: A report for 2025 – McKinsey](https://www.mckinsey.com/capabilities/tech-and-ai/our-insights/superagency-in-the-workplace-empowering-people-to-unlock-ais-full-potential-at-work)

– [Post by Shehab Ahmed on X about Large Concept Models](https://x.com/shehabOne1/status/1899938996542988794)
