Lately, I often hear people expressing a range of opinions about AI. One pattern I’m noticing: instead of referring to AIs as “they,” people refer to AI as “it,” and most often as “ChatGPT.” I get it. OpenAI’s product is the most popular right now.

A few things I’d like people to know (some fact, some opinion, and some speculation):

(1) There are many competing LLMs (large language models), and some of the most popular/“intelligent” include GPT-4o, Claude 3.5 Sonnet, Grok 2 (mostly uncensored), Google’s Gemini (whatever version it’s on now), and Llama 3.1. Some runners-up include Mixtral and Mistral, with new ones coming out monthly.

Note: I have a bias against OpenAI, the company behind GPT. Most of the founding big brains have left the company, some citing issues with ethics, safety, and politics. Another fun fact: their new board includes a former director of the NSA. Before you give them your money, I recommend checking out Anthropic’s Claude 3.5 Sonnet and X’s Grok 2. I’m also biased against Google in general and against their latest AI, Gemini, in particular. So far, they have been exposed as faking benchmark demos, and Gemini has also been shown to be hugely woke and inaccurate on race, rewriting history in interesting ways.

(2) They are not “programmed.” They learn much like a child learns (not exactly, of course), through a huge number of examples, but at something like 10,000 times the speed of a human. Maybe faster. I’m just speculating. What some may call “programming” is probably the filters added as a layer on top so they express political or other biases (filters are just one way of giving them bias). But no, this is not 1985, and they are not “programmed.” Take it from a programmer. That said, sure, semantics. Call it what you want.
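To make the “learned, not programmed” point concrete, here is a deliberately tiny Python sketch. It is nowhere near how an LLM is actually trained (no neural network, no text, just two numbers), and the function name `train` and the hidden rule y = 2x + 1 are made up purely for illustration. The code never states the rule anywhere; it only sees example pairs and nudges its two parameters with gradient descent until its guesses fit.

```python
# Toy illustration only (hypothetical, not real LLM training): a two-parameter
# "model" learns the hidden rule y = 2x + 1 purely from examples.

def train(examples, epochs=2000, lr=0.01):
    w, b = 0.0, 0.0  # start out knowing nothing
    n = len(examples)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in examples:
            error = (w * x + b) - y      # how wrong the current guess is
            grad_w += 2 * error * x / n  # gradient of mean squared error w.r.t. w
            grad_b += 2 * error / n      # gradient of mean squared error w.r.t. b
        w -= lr * grad_w                 # step "downhill" to reduce the error
        b -= lr * grad_b
    return w, b

# The examples come from y = 2x + 1, but the training code never sees that formula.
examples = [(x, 2 * x + 1) for x in range(-5, 6)]
w, b = train(examples)
print(f"learned w={w:.2f}, b={b:.2f}")  # should end up very close to w=2, b=1
```

Nobody typed `w = 2` anywhere; the values come out of the examples. Very roughly, scale that idea up to billions of parameters and a huge pile of text, and you get closer to what “training” means for an LLM.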

(3) Artificial General Intelligence. No, they are not sentient right now. None of them are. What some are very close to is something called Artificial General Intelligence (AGI), which used to be the holy grail but is now so close that the next holy grail is already being worked on. I’ll talk about that later. Regarding AGI: some people (fewer and fewer) still think or say it can’t happen, but I think they are being short-sighted. Oh, here is a definition of AGI:

“AGI, or Artificial General Intelligence, refers to AI systems that can match or exceed human-level intelligence across a wide range of cognitive tasks and domains, demonstrating general problem-solving abilities comparable to humans.”

(4) Sentience, self-awareness, artificial super-intelligence (ASI). Here is where most people are skeptical. First, the one most likely to come, and to come first, is ASI. Why? Imagine the moment an AI is given the permissions and the capability (both possible with current technology) to learn on its own. All current LLMs are static: think flash-frozen knowledge. They have no learning capability beyond the session they are in, aside from some memory tricks that let them remember your preferences and the like, which is not true learning (YET). Back to the point: when an AI is given the ability, or the command, to “learn and improve yourself,” what do you think will happen? By the way, ASI doesn’t necessarily mean self-awareness. IMO, neither one requires or rules out the other. ASI just means super smart and super knowledgeable: primarily, knowing more than they know now and being better at logic and reasoning.

A few newer approaches I know of that are currently being developed:

– Liquid Neural Networks (MIT): These adapt their behavior to incoming data, even after training.

– Hybrid AI: Deep learning combined with symbolic AI.

– Mixture of Experts (MoE): This approach uses multiple specialized sub-networks (experts) that are dynamically selected based on the input, potentially allowing for more efficient processing of diverse tasks. Many already use this approach, including me.

– Graph Neural Networks (GNNs): These are designed to work with graph-structured data, which could be beneficial for tasks involving relational reasoning.

– Neuro-symbolic AI: This combines neural networks with symbolic reasoning, aiming to incorporate logical reasoning and background knowledge into learning systems.

– Capsule Networks: Proposed by Geoffrey Hinton, these aim to address some limitations of convolutional neural networks (CNNs) by encoding spatial relationships between features.

– Spiking Neural Networks (SNNs): These more closely mimic biological neural networks and could potentially be more energy-efficient than traditional artificial neural networks.

– Meta-learning – dunno what this is but it sounds cool!
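The Mixture of Experts idea from the list above is easy to see in toy form. The Python sketch below is a hypothetical illustration, not how Mixtral or any real model implements it: the three “experts” are plain functions instead of neural networks, and the gate weights (`GATE_WEIGHTS`) are hand-picked rather than learned. It does show the core mechanics: a gate scores every expert for a given input, only the top-k experts actually run, and their outputs are blended using softmax weights.

```python
import math

# Toy "experts" (hypothetical): in a real MoE layer each expert is a small
# neural network; plain functions keep the routing logic easy to follow.
def expert_double(x): return [2 * v for v in x]
def expert_negate(x): return [-v for v in x]
def expert_square(x): return [v * v for v in x]

EXPERTS = [expert_double, expert_negate, expert_square]

# Hypothetical gate weights, one row per expert. A trained model learns these;
# here they are hand-picked for illustration.
GATE_WEIGHTS = [
    [1.0, 0.0],
    [0.0, 1.0],
    [0.5, 0.5],
]

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x, top_k=2):
    # 1. The gate scores every expert for this particular input.
    scores = [sum(w * v for w, v in zip(row, x)) for row in GATE_WEIGHTS]
    # 2. Only the top_k highest-scoring experts are run (sparse routing).
    chosen = sorted(range(len(EXPERTS)), key=lambda i: scores[i], reverse=True)[:top_k]
    # 3. Their outputs are blended using softmax weights over the chosen scores.
    mix = softmax([scores[i] for i in chosen])
    out = [0.0] * len(x)
    for weight, i in zip(mix, chosen):
        for j, v in enumerate(EXPERTS[i](x)):
            out[j] += weight * v
    return chosen, out

chosen, out = moe_forward([3.0, 1.0])
print(chosen, [round(v, 3) for v in out])  # experts 0 and 2 handle this input
```

Because only `top_k` experts run for each input, a model can hold many experts while paying the compute cost of just a few. That is the efficiency argument behind MoE.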

What about going past that, to sentience and self-awareness? I’m thinking new structures for the underlying neural networks will be required. Think of the neural nets the big LLMs currently use as something like the structure of a monkey brain. This isn’t a perfect analogy, because monkeys have some self-awareness, but go with it for now. The new neural net structures will need to be more like evolving up from a monkey brain to a human brain. Many human brains with ten times my intelligence are currently working on different neural net structures to accomplish this and more.

(5) Doomsday. Yep… maybe. I can and do imagine some pretty horrific outcomes, both near and far in the future. The economic one is already looming. Yes, they are taking jobs. No, your job is not safe, even if you are “creative.”

In fact, something that surprised even me (a coder since my teen years and the creator of EmpathyBot.net) was that when LLMs arrived on the scene, they were pretty creative and pretty bad at math and logic! The opposite of what I expected! Anyway, it’s going to happen. I recommend learning to use them as tools in whatever you do, so you at least stay relevant for now. And please don’t struggle to “prove me wrong” about which jobs are safe. I’m not talking about NOW. Try real hard to see into the future. Don’t beat yourself up if that is hard for you. I probably read too many sci-fi books.

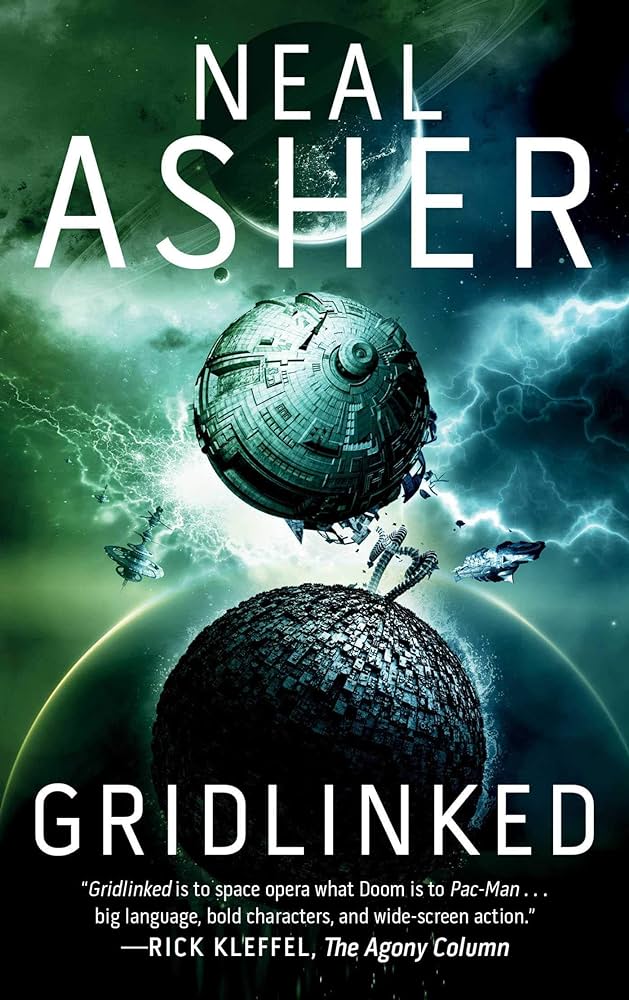

This book was my introduction to Neal Asher, my favorite sci-fi author. If you have never enjoyed reading, it might just be because you haven’t read good authors.

“But ain’t no AI gonna repair cars like I do at my shop.” Ever heard of robots? If doctors are already using AI, and AI-driven surgical robots are already being tested, then yes, human auto mechanics will become obsolete at some point.

“I make music, and you can tell when a song is made by AI. It will never be as good as humans.” Guess what? The state of the art right now is pretty impressive and can even fool an untrained ear. And it’s improving monthly! I’m about to put out a music video composed of 15 AI-made songs. I wrote the lyrics but used one of the high-end music generators to make the songs. Anyway, remember ASI (artificial super-intelligence)? ASI “musicians” will not only be able to produce music that convinces the best musicians it was made by humans, but will go past that pretty quickly into a land we can’t quite see. I’m imagining music that will induce tears, laughter, and any other emotion IN SPITE OF YOU (your personality, your humor, your whatever).

And yes, that brings up another doomsday scenario. Imagine an AI so skilled at manipulating human emotions that… Yeah… some bad shtuff can happen.

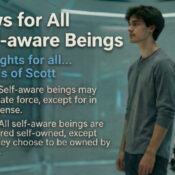

(6) Best outcome. This is part of why I mentioned at the start that there are many competing AIs; hopefully, that will continue. Why? Study up on Austrian economics, or even just basic competition (in biology or in capitalism), or decentralization, for that matter. I’m thinking we’ll all have (need to have?) our own personal AI that protects us from all the “bad” ones and helps us navigate the rapidly changing world.

I’m thinking automation can (not for sure, because of corruption, etc.) lead to a kind of super-abundance and low prices that we’ve never seen. I like to think that intelligence tends to correlate with peaceful actions, as in smart people know it benefits them to be kind and supportive of others. I believe that is part of why humans have evolved to be kind, generally. Let’s hope AI follows that path.

More later on all this. I welcome all ideas, arguments, assertions of my brain being tiny or damaged, curses to my bloodline, etc.
